OpenAI’s text-generating AI chatbot, ChatGPT, took the world by storm when it was publicly launched in 2022.

Want a good caption? Ask ChatGPT.

Need advice? Ask ChatGPT.

Looking for tips on how to write a resignation letter? Ask ChatGPT.

The platform quickly became so mainstream that many users reach for it almost by reflex, whether they're drawing up business decisions and expansion plans or sorting out far more personal concerns.

However, a new feature may make users think twice.

Recently, over 100,000 shared chats were exposed through GPT’s share feature, revealing both confidential business information (e.g., upcoming product innovations, marketing plans, and customer records) and personal data from conversations users thought were private.

So, in this article, we’ll look at what happened and why, and how AI users can protect their business and personal information.

GPT’s Controversial Searchability Feature Explained

It all started when OpenAI added a share button so users can pass on chats quickly.

A developer, for example, could ask ChatGPT for cleaner code and share it with the team. Instead of copy-pasting, he just hits the upward arrow icon, and ChatGPT makes a link he can send – an easier way to spread knowledge or the right prompts and output.

But what if users shared confidential information – without knowing it could reach the wrong people or the whole internet?

Unintended Consequences for Users and Businesses

Here’s another complication: some people treat ChatGPT as a safe space, asking about everything, from relationship advice and family issues to marketing strategies and product development. For a while, these conversations stayed within the app.

Unfortunately, not everyone realised that sharing a chat, and ticking the option to make it discoverable, could make it searchable on Google and visible to anyone online. Many assumed the link would remain private and accessible only to the people they shared it with directly.

As a result, many business owners, employees, and individuals ended up at risk of sharing more than they intended.

Why It Sparked Widespread Privacy Concerns

The share feature was designed to make AI-generated conversations more accessible. It saves teammates from having to work out prompts that someone else has already perfected, and it helps distributed teams pick up where they left off.

By allowing users to share specific conversations, OpenAI aimed to encourage people to spread:

- Helpful responses;

- Coding solutions;

- Creative prompts;

- Research summaries; and

- Other useful content.

But what OpenAI did not fully consider was that users would see ChatGPT as a personal space — used for everything from writing emails to talking about mental health.

Sometimes, users also shared sensitive details like birthdays, addresses, names, and other private information that shouldn’t be made public. Thus, when news broke that ChatGPT conversations could be found online, users went into full panic mode.

The worry wasn’t just about what had already been leaked, but what could be leaked in the future, especially in the event of breaches, cyberattacks, or hacking.

How GPT’s Searchability Might Put Your Business at Risk

More businesses are now using AI to automate tasks.

With the right prompts, they can cut down on manual work and make their processes faster and more efficient.

However, this also means entering business details like company data, sales figures, management decisions, and other strategic information that may be private and confidential.

Now, how do leaks like those caused by GPT’s controversial searchability feature affect businesses, particularly Down Under?

Data Privacy and Confidentiality Risks

Many employees, and even business owners, turn to ChatGPT to:

- Write emails;

- Generate client proposals;

- Create reports; or

- Plan campaigns.

While it’s convenient, this can be risky. It raises concerns not just about originality, but also about accidentally including sensitive or internal data in the chat.

For instance, you might inadvertently include:

- Client names;

- Project timelines;

- Pricing structures;

- Employee details; and

- Log-in credentials.

As a result, internal discussions meant only for the team might end up accessible to competitors, clients, or anyone on the internet who looks up related keywords on a search engine.

Impact on Intellectual Property and Proprietary Information

Aside from privacy and confidentiality, businesses can also face intellectual property (IP) issues, especially when they use ChatGPT for:

- Idea generation;

- Product development input; or

- Early drafts of branding materials.

The Ghibli-fication of photos in early 2025, for example, raised questions about whether AI-generated art is a tribute to its original creators or a copyright violation.

Meanwhile, countries like Denmark have started drafting laws to protect their citizens’ likeness (their face, voice, and body) from potential AI misuse.

And Australia might follow suit.

Potential Regulatory and Compliance Issues in Australia

While Australia doesn’t have a specific law on AI abuse yet, it already has rules in place to deal with related data breaches.

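Under the Privacy Act of 1988, organisations covered by the Act (generally businesses with an annual turnover above $3 million, plus some smaller ones such as health service providers) must protect the personal information they collect, use, or store. So if client data or other personal details are accidentally leaked through GPT chats, the company could still be held legally responsible.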

The Act also includes the Notifiable Data Breaches (NDB) scheme, which requires covered organisations to notify affected individuals and the Office of the Australian Information Commissioner (OAIC) when a data breach is likely to result in serious harm.

Given these measures, Australian businesses must treat AI use with the same caution they apply to other platforms and digital tools. Otherwise, a mistakenly shared conversation could trigger investigations, penalties, and public backlash.

Public Backlash and Industry Reactions

Unsurprisingly, OpenAI faced strong backlash from the public, especially from professionals and business owners.

What was once seen as a powerful productivity tool quickly became a potential privacy risk. Users grew concerned about what they had asked GPT to generate — especially those who had treated the chatbot as a safe space for their personal and professional concerns.

User Concerns Around Personal and Business Data Security

For individuals, the accidental exposure of sensitive information can be problematic as many share their:

- Names;

- Addresses;

- Health issues; or

- Legal concerns.

Meanwhile, businesses are worried about:

- Client data;

- Confidential strategies; and

- Intellectual property.

Either way, the recent fiasco over the searchability feature had many users questioning whether AI tools like ChatGPT are truly safe and reliable.

Response from AI Developers and Regulators

Fortunately, OpenAI and other AI companies were quick to respond.

OpenAI removed the option that made shared chats discoverable by search engines and said it was working to have already-indexed conversations removed. Users can also limit what is kept through privacy settings, such as temporary chats that aren't saved to their history.

Meanwhile, regulators in Australia and other countries have started paying closer attention, sparking discussions on AI transparency, data protection, and user consent. They’re also considering updates to existing privacy laws to address AI-specific risks.

Steps Businesses Are Taking to Mitigate Risks

Businesses, for their part, are beginning to take AI use more seriously. They have started to acknowledge the importance of clear rules and guidelines on what information should and shouldn’t be shared with AI.

Instead of banning ChatGPT or AI completely, companies are:

- Training staff and virtual assistants not to enter client data, personal details, or business secrets into AI tools;

- Using AI platforms designed for businesses with stronger privacy and data controls;

- Involving legal teams to check contracts and ensure data law compliance; and

- Strengthening cybersecurity to protect devices and prevent security risks.

Can Your GPT History Be Used as Court Evidence?

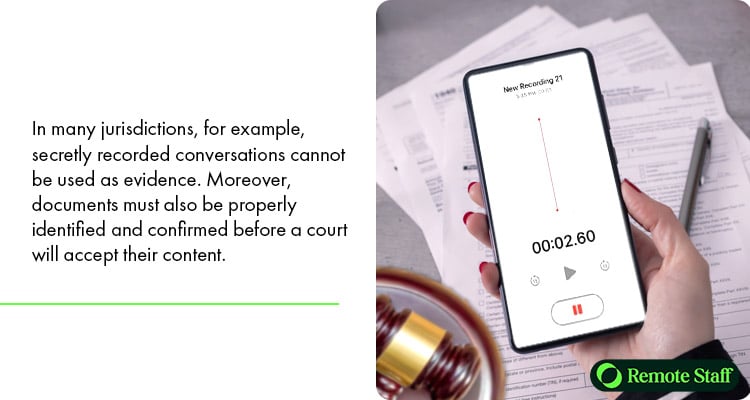

Courts are strict about what counts as evidence.

In many jurisdictions, for example, secretly recorded conversations are inadmissible as evidence. Documents must also be properly authenticated before a court will accept their content.

Recently, however, courts in several countries, such as the Philippines, have ruled that chat histories can be used as evidence. Australian courts can also admit them, subject to certain conditions.

This raises the question: do ChatGPT conversations fall into this category?

Legal Precedents Around Digital Communications

To be considered in a decision, digital evidence must be:

- Relevant – The content must be directly related to the case;

- Authentic – It must be proven that the content is genuine and unaltered; and

- Admissible – It must not be illegally obtained or prohibited under the rules of evidence.

In many jurisdictions, chat histories from messaging platforms like WhatsApp, Messenger, and Slack have already been presented and accepted.

Thus, there’s a strong possibility that AI-generated chats – including those with GPT – can also be used as evidence if properly documented and authenticated.

How Stored AI Chat Data Could Be Subpoenaed

Courts can issue subpoenas — legal orders that require a person to appear in court or provide documents, or face legal consequences.

In the case of GPT, a subpoena could require users to produce their chat history in situations such as:

- Employment Cases – Where an employee used GPT to draft sensitive emails or discuss confidential issues;

- Defamation or Harassment Cases – Where a user may have asked the AI to generate harmful content; or

- Intellectual Property Disputes – Where AI chats document early versions of ideas or inventions.

In these situations, a subpoena could request:

- Screenshots or exports of the chat history from the user;

- Records from the AI platform; and/or

- Testimony verifying that the chat logs are accurate and unaltered.

Implications for Professional or Official Use of GPT Tools

Because AI chats can be exposed through sharing or demanded as evidence, businesses need to be careful with AI-generated content, even something as simple as an AI-written email. The risks may include:

- Data Leaks – If an employee enters confidential client data into GPT and that chat is later shared, the company could be held liable for data privacy violations.

- IP Exposure – Using GPT for idea generation could unintentionally leak trade secrets or create disputes about originality.

- Inconsistent Messaging – Left unchecked, AI-generated drafts might contradict official company policies or public statements.

Best Practices for Businesses Using GPT and Similar AI Tools

Despite the possible risks, AI remains a powerful (and indispensable) tool for improving workflows. However, to ensure efficiency and security, businesses need to take certain precautions.

Internal Guidelines for Safe AI Usage

Companies should set clear, company-wide rules so employees know what’s allowed, what’s risky, and what to avoid when using tools like GPT:

- Data Restrictions – Prohibit the input of:

  - Personal data (names, phone numbers, addresses);

  - Client information;

  - Proprietary strategies; and

  - Other confidential content.

- Tool Access – Specify which AI tools are allowed and with what settings (e.g., chat history turned off).

- Approved Use Cases – Clearly define what AI can be used for, such as:

  - Content drafting;

  - Summarising documents; or

  - Language translation.

  Conversely, the guidelines should also outline which tasks remain outside AI’s purview, like:

  - Legal advice;

  - Client communications; or

  - Financial forecasting.

- Data Storage – Advise employees to avoid downloading sensitive information to their devices. Instead, it should remain in secure company databases.

- Sharing and Collaboration – Set guidelines on what content can be shared or uploaded to AI tools, whether or not they come with a search feature.

Employee Training on Data Security

In addition to setting guidelines, companies should regularly train employees so they clearly understand and remember the rules:

- The Basics of AI – Explain how tools like GPT work, what happens to the data shared, and the difference between temporary and saved chats.

- Data Classification – Help staff identify what types of data are considered sensitive, confidential, or restricted under laws like the Privacy Act of 1988.

- Case Studies – Share real-world examples of data leaks or lawsuits involving AI misuse and abuse to emphasise the risks and raise awareness.

- Safe Prompting Techniques – Teach employees how to phrase prompts without revealing private or identifying information.

- Incident Reporting – Lastly, provide a clear process for what to do if someone accidentally inputs sensitive data into an AI tool.
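Training can be backed up with a simple technical safeguard. The Python sketch below is a hypothetical pre-submission check (the `redact_prompt` function and its patterns are our own illustration, not a feature of ChatGPT or any other AI tool) that swaps obvious identifiers, such as email addresses, Australian mobile numbers, and nine-digit tax file numbers, for placeholders before a prompt is pasted into an AI tool. It assumes Python 3.9 or later. Pattern matching like this will miss plenty of sensitive details, including client names and project codenames, so treat it as a supplement to training rather than a replacement.

```python
import re

# Hypothetical patterns for illustration only. They catch a few obvious
# identifiers; names, project codenames, and free-text details are NOT detected.
PATTERNS = {
    # Email addresses such as jane@example.com
    "EMAIL": re.compile(r"[\w.+-]+@[\w-]+(?:\.[\w-]+)+"),
    # Australian mobile numbers such as 0412 345 678 or +61 412 345 678
    "PHONE": re.compile(r"(?:\+61\s?|0)4\d{2}\s?\d{3}\s?\d{3}"),
    # Nine digits written as three groups of three, the usual tax file number
    # format (this may also catch other numbers written the same way)
    "TFN": re.compile(r"\b\d{3}\s\d{3}\s\d{3}\b"),
}


def redact_prompt(text: str) -> tuple[str, list[str]]:
    """Replace each match with a [LABEL] placeholder and list the labels found."""
    found = []
    for label, pattern in PATTERNS.items():
        text, count = pattern.subn(f"[{label}]", text)
        if count:
            found.append(label)
    return text, found


if __name__ == "__main__":
    prompt = "Email jane@example.com or call 0412 345 678 about the renewal."
    clean, flags = redact_prompt(prompt)
    print(clean)  # Email [EMAIL] or call [PHONE] about the renewal.
    print(flags)  # ['EMAIL', 'PHONE']
```

If the check flags anything, staff can review the prompt before sending it, or follow the incident reporting process if sensitive data has already been entered.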

Reviewing AI Provider Terms and Privacy Policies

It’s important for businesses to carefully review the terms of service, data use policies, and privacy agreements of the AI provider.

In particular, you should be able to answer these questions when evaluating a tool:

- Does the provider store your inputs or use them to train their models?

- Where is the data stored?

- Can you turn off history or delete past chats?

- Is the provider compliant with data regulations?

- Is there a business or enterprise plan?

It’s also a good idea to check in with your legal team or data protection officer before using AI tools to handle sensitive business information.

Frequently Asked Questions (FAQs)

Here are some of the frequently asked questions about GPT and its security.

#1. Does GPT Keep My Data Forever?

Not necessarily, but saved conversations don't expire on their own either. Chats that appear in your history stay on your account until you delete them, and unless you opt out, your conversations may be used to improve OpenAI’s models.

When you delete a chat, OpenAI says it is removed from its systems within 30 days, unless it must be kept longer for security or legal reasons.

For more privacy, you can turn off model training in your data settings or use temporary chats. These are not saved to your history or used to train models, although they may be kept for up to 30 days for safety checks.

#2. How Can I Delete My GPT History?

You can delete your entire ChatGPT history by following these steps:

- Sign in to your account;

- Click your avatar or username;

- Go to Settings > Data Controls;

- Click “Clear Conversations” or “Delete All Chats”; and

- Confirm by clicking “Confirm deletion.”

If you only want to delete a single conversation, just:

- Hover over the chat in the sidebar;

- Click the three-dot menu next to it and select “Delete”; and

- Confirm deletion when prompted.

#3. Is Using GPT in the Workplace Legally Safe?

It depends on your usage. It’s generally safe when:

- You’re using GPT for general research;

- No sensitive, personal, or confidential data is shared; and

- You follow company policies and privacy laws.

However, you should avoid:

- Inputting confidential client or employee info or any content covered by NDAs;

- Entering business strategies, financial information, or product plans; and

- Generating content based on copyrighted materials.

#4. What Industries Are Most At Risk From AI Data Issues?

Industries that deal with personal, sensitive, or confidential data face the most risk, since even small data leaks or misuse can cause legal, financial, or reputational problems. These include:

- Healthcare

- Legal Services

- Finance and Banking

- Technology

- Research and Development

- Marketing and Advertising

- Education

- E-Commerce

- Government

Hence, these sectors must approach AI tools with strict safeguards, privacy controls, and targeted training to avoid serious data risks.

Conclusion: Balancing Innovation With Privacy and Legal Awareness

Technology is quickly evolving, and AI tools are only getting better.

So the real question isn’t whether to use AI, but how to use it responsibly – without running into legal, ethical, or privacy problems. Therefore, businesses should see GPT-based tools not just as smart text generators, but as data processors that can store, learn from, and possibly leak the information users provide.

Rather than fearing or banning AI, the smarter approach is to train informed, responsible users who can use it to improve workflows safely. Keeping up with current laws and regulations on AI misuse will also help your business take proactive steps.

At the end of the day, embracing technology and its innovations offers more benefits than drawbacks, especially since AI is here to stay.

Keep your business up to date with the latest tech with help from our dedicated remote professionals. Call us today or request a callback now.

Syrine is studying law while working as a content writer. When she’s not writing or studying, she engages in tutoring, events planning, and social media browsing. In 2021, she published her book, Stellar Thoughts.