Artificial intelligence is evolving rapidly. It can write essays, create images, and make videos – all you need are the right prompts.

This development has drastically transformed how we work, create, and live. Some users are even using AI to recreate images, generate versions of their favorite characters, or rework official movies and stories for personal use. Many small businesses are also using it to compete with large enterprises.

However, the widespread use of AI-generated images has led many creators to question the ethical and legal implications of creating content based on user data.

Thus, many legal scholars emphasise the need for laws and regulations to keep up, especially with how easy it’s become to feed anyone’s face, body, or voice into image generation models without their consent – or even their knowledge.

And until recently, no country had made much progress in legally addressing these issues.

Here’s how Denmark could very well be the first.

A Legal First in the Fight Against Deepfakes and AI Abuse

It’s hard to create and enforce laws on AI as it evolves so quickly.

While laws take months or years to pass, technology can change in a matter of days or weeks.

That’s why many countries struggle to protect their citizens’ privacy rights. But Denmark is determined to get the ball rolling regardless.

Why Denmark’s Move is a Landmark in Digital Rights

About a week ago, Denmark surprised the world with an unprecedented move to grant its citizens the copyright to their face, body, and voice.

The proposed bill is the first in Europe to give citizens the legal right to ask platforms like Facebook, Instagram, and TikTok to take down fake digital versions of themselves online.

If and when it comes to pass, this groundbreaking law:

- Gives people control over how their photos and voices are used (if at all);

- Allows them to request takedowns or sue if their likeness is used without their knowledge or consent;

- Provides a legal solution for AI-generated deepfakes; and

- Sets a global example that could inspire other countries to follow.

Growing Global Scrutiny Towards AI-Generated Content

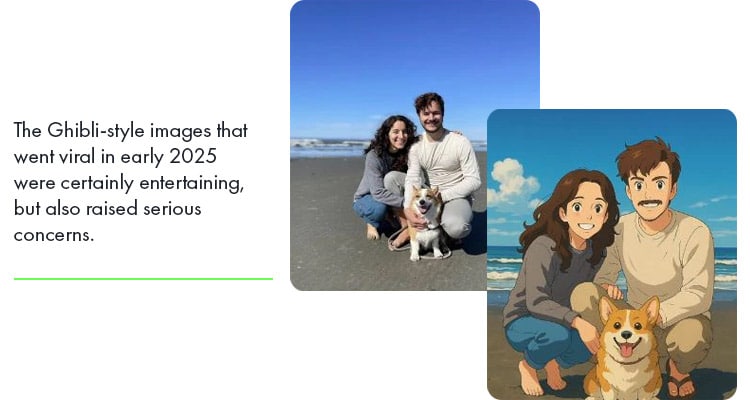

The Ghibli-style images that went viral in early 2025 were certainly entertaining, but also raised serious concerns. As AI gets more advanced, it’s becoming harder to tell what’s real and what’s made by AI – blurring legal, ethical, and moral boundaries in the process.

Some say AI-generated images are just tributes to one’s favorite artists, but many argue that generating such images actually violates copyright laws.

Thus, artists and creators like Hayao Miyazaki worry that AI can copy their style or use their work without permission, especially since no country in the world has yet established a legal infrastructure with clear guidelines for ethical use.

Scarlett Johansson’s Case: When AI Uses Your Voice Without Consent

Even before AI image generation became mainstream, Hollywood star Scarlett Johansson had already publicly condemned the serious threats posed by AI-generated ads. In 2023, she took legal action against an AI app for using her name and likeness in an ad without her permission.

The ad made it look like she endorsed the product, even though she wasn’t involved at all. This case drew global attention to how easily AI can copy someone’s voice or appearance to create convincing fake videos or deepfakes.

It also raises big questions for the public: How can we tell if what we see and hear online is real?

And more importantly, how can we protect ourselves from featuring in fake, AI-generated content?

The Rise of AI Tools in Marketing and Media

Creating captivating marketing and social media content used to take hours or days.

Now, AI makes writing captions, designing layouts, and creating graphics much faster and easier. However, it also raises concerns about authenticity, ethics, and job security.

Popular Use Cases (Social Media, Ads, Influencer Imagery)

AI tools are often used for:

- Social Media – AI is widely used to make eye-catching posts, write engaging captions, and suggest the best times to post. It can also analyse trends and audience behavior to help brands stay relevant.

- Advertising – AI can design personalised ads for specific groups and quickly create different versions to see what works best, saving time and money. It can also write ad copy, suggest keywords, and make visuals that fit a brand’s style.

- Influencer Imagery – Many influencers and content creators use AI to improve their photos by adjusting lighting, smoothing skin, and creating a “perfect” version of themselves.

Deepfakes, AI Voices, and the Ethical Gray Area

Many people use AI to improve their photos.

However, more and more users are also creating realistic videos or audio clips that look and sound like real people, even if those people never actually said or did those things.

For example, AI-generated deepfakes can spread false information, damage reputations, be used to commit fraud, or feature in explicit content without the subject’s knowledge or consent. Politicians or celebrities can be falsely shown saying or doing things they never did, leading to public confusion and distrust.

These situations create a big ethical gray area. Some say deepfakes are just harmless parodies or art, while others see them as serious threats to privacy, consent, and public trust.

Public Concern Over Consent and Representation

As AI gets more advanced, people are becoming more worried about their image, voice, and personal data being used without their permission.

Since we often upload photos online and leave a digital footprint, many people feel uneasy knowing their faces, voices, or bodies could be used to train AI models.

Aside from consent, AI-generated content also raises concerns about representation. For example, a deepfake might show a public figure making offensive comments about certain groups. Content like this worsens the existing problem of fake news and undermines people’s ability to make well-informed decisions that affect their own lives and everyone else’s, such as how to vote in an election.

Which Individuals Are Most At Risk for AI Abuse?

Improper AI use can affect anyone online, but some groups are more at risk than others. Let’s take a closer look.

Public Figures: Celebrities, Politicians, Influencers

AI tools can easily scrape images, videos, voice clips, and statements of public figures from the internet. This puts celebrities, politicians, and influencers at risk of:

- Voice Cloning and Impersonation – AI can copy their voices to trick people, promote fake products, or spread false recordings.

- Deepfake Videos – AI-generated videos can also show them saying or doing harmful, illegal, or explicit things, which can ruin their reputation and, in some cases, erode public trust.

- Image-Based Exploitation – Celebrities and influencers are at high risk of being targeted by AI tools that can be used to create explicit content using their image.

Vulnerable Groups: Minors, Women, and Marginalised Communities

Aside from public figures, the following are also at serious risk:

- Minors – Children and teens spend more time online, making them easy targets for AI-generated deepfakes, identity theft, or online predators out to profit from using their likeness for nefarious purposes.

- Women – Women are often targeted by AI tools that create fake nude photos or videos without their consent. These are then used for harassment, blackmail, or shared on adult sites without permission.

- Marginalised Communities – Members of racial, ethnic, LGBTQ+, and other marginalised groups can also be targeted by AI-generated fake content or hate speech that spreads harmful stereotypes.

Everyday People: Social Media Users with Large Public Profiles

Even regular people can be victims of AI abuse. Those with a strong online presence, like content creators, vloggers, or small business owners, are especially at risk of:

- Catfishing and Identity Theft – AI can create fake profiles using someone’s photos or voice, which can lead to scams, catfishing, or damage to their reputation.

- Business Scams – Small business owners and influencers might have their name or photo used to promote products or services without their consent or knowledge.

- Cyberbullying – People with a public following are more likely to be targeted by AI-made memes, fake content, or messages meant to embarrass, provoke, or damage their reputation.

Real-World Harm: What Happens When Your Likeness is Misused

AI abuse may start online, but it can quickly lead to real-world consequences. It can affect your mental health, your relationships, and even your career.

Here are some examples.

Deepfake Pornography and Non-Consensual Imagery

In recent years, female celebrities like Emma Watson, Scarlett Johansson, and Gal Gadot have been targeted by deepfakes. Their faces were placed on fake explicit videos and shared on adult websites and social media.

Left unaddressed, these manipulated and AI-generated images and videos may result in:

- A loss of privacy, causing fear, anxiety, and a lasting sense of being violated;

- Feeling exposed or watched, which can hurt self-esteem and mental health; and

- Damage to your reputation, affecting your job and relationships.

Voice Cloning for Fraud and Scam Calls

In 2019, a scammer used AI to copy the voice of a German CEO and called the manager of the company’s UK branch, asking him to urgently send a large amount of money. The money was sent, and by the time the company realised it was a scam, the funds had already been moved across several countries.

These scams are hard to spot because cloned voices can sound almost exactly like the real person, including their accent and tone. Worse, victims often lose money and may also feel stressed, embarrassed, and unable to trust others.

AI-Powered Catfishing and Social Engineering

Cybersecurity experts found fake LinkedIn profiles using AI-made photos to trick professionals and steal sensitive data. These fake accounts connected with real people and started friendly chats to gain trust.

Once the scammers had gained trust, they asked for company information, sent malicious links, or gathered data for bigger attacks like phishing. Victims could lose money, jobs, or private information. Many also struggled to trust others afterwards, since the chats seemed real and they didn’t realise it was a scam until it was too late.

Emotional, Professional, and Legal Fallout for Victims

When someone uses your face, voice, or identity without permission, the harm goes beyond feeling embarrassed online. Some people may feel unsafe or like they’re being watched, especially if it keeps happening.

Moreover, even if the content is fake, the damage it inflicts is all too real.

Deepfakes or false posts can ruin someone’s reputation, affect job opportunities, or even cause them to lose their job. While victims may try to fight back, many countries still lack clear laws against AI abuse. And even where rules exist, taking legal action can be costly and slow, ultimately making it difficult for the victims to prevail and achieve justice.

Inside Denmark’s Face, Voice, and Body Copyright Law

Denmark’s proposed legislation seeks to recognise a person’s face, voice, and body as their intellectual property, much like copyrighted music, photos, and written work.

Here’s how they plan to do it.

What the Law Covers and How It Works

Once the law takes effect, a person’s likeness, how they look, sound, and move, will be treated like intellectual property. This means Danish people will be able to:

- Protect Their Faces – No one can use their face in AI-generated images or videos without clear permission.

- Protect Their Voices – Their voice cannot be cloned and used in calls, videos, or advertisements without consent.

- Protect Their Body and Movements – Their body shape, gestures, and movements will also be protected by law against being copied with motion capture or AI without their knowledge.

So, if someone uses their likeness without permission, Danish citizens can take legal action, whether the offending party is an individual, a company, or an AI developer.

Moreover, online platforms like social media or video apps may have to remove unauthorised content – or face penalties if they ignore takedown requests.

How It Applies to AI Generators and Deepfake Tech

Denmark’s proposed law will require AI developers and users to get clear permission before making any image, video, or audio that uses someone’s face, voice, or body.

If a tool is made in a way that allows or encourages misuse, like creating fake images of real people without consent, it could face fines, restrictions, or even an outright ban in Denmark.

Penalties and Enforcement: Who’s At Risk?

The following can be held liable under Denmark’s face, voice, and body copyright law:

- Individual Offenders – People who share deepfakes, use AI to copy someone’s voice for scams, or post edited photos or videos of others without permission;

- AI Developers and Platform Owners – Tech companies that create, host, or operate AI tools can be held responsible if their tools are used to make harmful or unauthorised content, or if they don’t set up proper safety measures; or

- Online Platforms and Social Media Sites – Video-sharing sites and social media apps could be penalised if they allow unauthorised content to spread, or if they fail to remove harmful content quickly, monitor for repeated abuse, or cooperate with authorities during investigations.

What This Means for Businesses Using AI in Branding and Advertising

Denmark’s move marks a big shift for companies that rely heavily on AI for marketing, branding, and advertising.

While AI offers creative and cost-effective ways to make realistic avatars, voiceovers, and videos, Denmark’s proposed law would set clear legal boundaries – ones that countries like Australia may soon adopt as well.

Cross-Border Implications for Australian Companies

Australian businesses using AI-generated content for global marketing need to be careful, especially if their ads or materials target audiences in Denmark.

This means you should be extra cautious when:

- Making AI-generated ads, avatars, or videos targeting Danish audiences;

- Using public photos, voices, or movement data without clear permission; or

- Working with AI tools or vendors that lack safety features or proper licenses.

Risks of Unlicensed AI Content Use (Even If AI-Made)

Even if AI generates an image, video, or voice clip entirely from scratch, it can still break the law, especially if it closely resembles a real person in appearance or voice.

This is important to remember because:

- Misusing Likeness Means Legal Trouble – If your AI-made ad uses a face or voice that resembles a real person, even by accident, you could be sued or fined;

- No Excuse for “AI Did It” – Saying “AI made it” won’t protect you from legal responsibility; and

- Global Reach, Global Risk – If your content reaches people in Denmark, you could still be held accountable under Danish law, even if your business is in Australia.

Importance of Transparent Media Sourcing and Permissions

With stricter laws like Denmark’s on the horizon, businesses now need to handle AI-generated content as carefully as traditional media.

Thus, you have to:

- Secure proper consent prior to generating content based on the likeness of real individuals;

- Partner only with ethical AI vendors that have proper licenses and sourcing records in place;

- Keep documents that prove you legally acquired and used all content; and

- Set up internal checks to spot any unintentional likenesses across all marketing collateral before launching campaigns.

Will More Countries Follow Denmark’s Lead?

Denmark’s approach to AI image generation is pushing other countries to act, especially as AI-generated deepfakes, voice clones, and other AI content become harder to tell apart from the real thing – and are increasingly used in harmful and illegal activities.

Overview of EU, US, and AU AI Regulatory Discussions

The European Union (EU) has already passed the Artificial Intelligence Act, the world’s first comprehensive regulatory framework on artificial intelligence. It mainly targets high-risk AI systems but also includes rules on transparency and consent, especially for AI-generated content.

In contrast, the United States has no single federal law on AI misuse or likeness protection. However, some states like California, Illinois, and Texas have laws covering biometric data, deepfakes, and the right of publicity.

Meanwhile, Australia is still in the early stages of AI regulation but is already actively considering stronger rules. The AU government is holding consultations with tech companies and legal experts to develop clearer protections against digital impersonation and privacy violations.

Growing Push for AI Accountability Worldwide

As AI becomes more advanced and accessible, many countries are recognising the need to hold people and companies accountable.

This development goes beyond privacy and digital identity protection.

It’s also about rebuilding public trust in digital platforms and making sure innovation is used responsibly. If done right, businesses, developers, and platforms will no longer treat AI content as a legal grey area.

The Role of Consumer Advocacy in Driving Change

As more people become victims of deepfakes and unauthorised use of their identity, consumer advocacy groups play a key role in urging governments to act.

They raise public awareness and call for stronger laws. They also put pressure on lawmakers through petitions, reports, and media campaigns to push for better protections.

How to Protect Your Business When Using AI

Here are some ways to protect your business when using AI-generated content for marketing, branding, or communication.

Drafting Internal Policies on AI Content Usage

Clear internal policies help ensure consistency, accountability, and compliance across your team. Thus, they should:

- State clearly when and how your team can use AI tools;

- Set rules against generating images or videos using real people’s likenesses without their permission;

- Require written consent before anyone uses a person’s likeness;

- Specify that only AI tools with strong safety and legal checks may be used;

- Assign someone to review AI content before it’s published;

- Require a record of where the AI content came from;

- Inform your team about the risks of misusing AI; and

- Include a plan in case someone complains about or challenges your content.

Ensure Licensing of Training Data and Outputs

It’s important to make sure the AI tools you use are trained on properly licensed or public domain data. This helps you avoid inadvertently using content taken without permission.

Furthermore, check the AI tool’s terms of service to confirm you’re allowed to use its output for commercial purposes. If you’re using AI-generated music, images, videos, or voiceovers in your brand materials, keep records to show you have the rights to use them.

Best Practices for Consent, Attribution, and Auditing of AI Outputs

Here are some key best practices to ensure legal and ethical use of AI-generated content:

- Always get permission if you’re using real photos or videos to train your AI;

- Use release forms that explain how the content will be used, shared, and stored;

- Give credit to original creators when needed and follow any rules for attribution;

- Don’t claim AI-generated work is fully original if it’s based on existing material; and

- Keep clear records of the AI tools, prompts, and any data you used.
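The record-keeping practice above could be sketched in Python as a simple append-only log. This is only an illustrative sketch, not a legal standard: the `AIContentRecord` fields, the function names, and the sample values are all assumptions made for the example, and a real audit trail should follow whatever your legal team requires.

```python
import json
from dataclasses import asdict, dataclass, field
from datetime import datetime, timezone
from io import StringIO
from typing import List, Optional, TextIO


@dataclass
class AIContentRecord:
    """One audit-log entry for a piece of AI-generated content (hypothetical schema)."""
    asset_name: str
    tool: str
    prompt: str
    source_data: List[str] = field(default_factory=list)
    # Hypothetical reference to a signed release form, if a real person is involved.
    consent_reference: Optional[str] = None
    created_at: str = field(
        default_factory=lambda: datetime.now(timezone.utc).isoformat()
    )


def append_record(log: TextIO, record: AIContentRecord) -> None:
    """Write the record as one JSON line so earlier entries are never rewritten."""
    log.write(json.dumps(asdict(record)) + "\n")


def load_records(log: TextIO) -> List[AIContentRecord]:
    """Read every non-empty JSON line back into a record."""
    return [AIContentRecord(**json.loads(line)) for line in log if line.strip()]


# Usage with an in-memory log; a real log would be a file opened in append mode.
demo_log = StringIO()
append_record(
    demo_log,
    AIContentRecord(
        asset_name="spring-banner.png",
        tool="example-image-model",  # placeholder tool name
        prompt="pastel storefront illustration, no people",
        source_data=["in-house product photos"],
    ),
)
demo_log.seek(0)
demo_records = load_records(demo_log)
```

Storing one entry per line keeps the log easy to append to and easy to hand over if someone later challenges where a piece of content came from.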

When to Consult Legal Teams or IP Experts

Legal experts can help you check if you have sufficient consent, or if you might be violating personal likeness rights, when your AI tools use data that looks or sounds like real people.

Moreover, whether you’re using third-party AI tools or working with vendors, your legal team should check the terms of service and contracts. This ensures you can legally use the outputs for commercial purposes without violating likeness rights or privacy laws.

It’s also important to consult your lawyer if you receive takedown requests or legal complaints for using someone’s likeness or copyrighted material. They can help you resolve the issue and reduce your legal risk.

The Legal Gap: Why Legislating AI Abuse Remains a Challenge

Even with the promising progress in Denmark’s legal system, AI abuse remains difficult to police because of several factors. Let’s take a look.

Rapid Tech Advancements Outpacing Legal Frameworks

AI is evolving so quickly that existing laws can’t keep up, leaving people and businesses open to risks.

Current laws on privacy, copyright, and data protection were enacted long before AI tools became capable of replicating human features and speech. As a result, there is still confusion about how these laws apply when AI is involved.

Jurisdictional Challenges in a Borderless Digital Space

There are also concerns about jurisdiction since a country’s laws usually only apply within its borders. But AI content can be made in one place, stored in another, and spread worldwide, making it hard to enforce any one country’s rules.

The absence of global standards lets bad actors take advantage of legal gaps, especially in places with weak or no AI regulations.

Defining “Ownership” of One’s Digital Self

Many countries still don’t have clear laws on whether people legally own their digital identity.

Without clear definitions, it’s hard to protect people’s rights or hold creators of AI-generated content accountable. That’s why Denmark’s move to define what can be copyrighted, like a person’s face, voice, or body, is a big step forward.

While a global agreement on this issue is still a long way off, it’s not a bad place to start by any means.

Balancing Innovation with Protection

Lawmakers must carefully create flexible policies that protect people from AI abuse without holding back ethical innovation.

This means governments, tech leaders, civil society, and global organizations must work together to create rules that keep up with technology while protecting human rights and digital dignity.

FAQ: AI Image Generation and Copyright Laws

Here are some frequently asked questions about AI image generation and copyright laws.

#1. Can I Use AI-Generated Faces for Ads Without Permission?

Under Denmark’s proposed law, using AI-generated faces that resemble real people, even if not based on anyone specific, could lead to legal trouble.

Hence, it’s safer to avoid realistic AI faces in ads unless you have the rights or use clearly fictional designs.

#2. Will Similar Laws Come To Pass in Australia?

As of this writing, Australia does not recognise legal rights over a person’s likeness, but that could change soon.

The AU government has already made it a crime to create or share non-consensual sexually explicit deepfakes, so the law could plausibly expand to cover AI-generated faces or likenesses used in ads.

#3. How Can I Check if An AI Image Violates Someone’s Rights?

Catching AI abuse can be tricky, but asking these questions can help guide you:

- Does the image look like a real person?

- Was it made using real photos without permission?

- Is it being used in a way that could mislead people?

- Do local laws protect a person’s image or identity?

If the answer to any of these questions is “yes,” it’s safer to use a different image. Moreover, when in doubt, avoid using it, especially in ads, or ask a legal expert for advice.
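For teams that review many images, the four questions above could be turned into a small pre-publication check. The Python sketch below is a hypothetical illustration only: the `ImageCheck` class and its field names are invented for this example, the answers still have to come from a human reviewer, and a clean result is not legal clearance.

```python
from dataclasses import dataclass
from typing import List


@dataclass
class ImageCheck:
    """A reviewer's answers to the four checklist questions (hypothetical names)."""
    looks_like_real_person: bool
    made_from_real_photos_without_permission: bool
    could_mislead_viewers: bool
    local_law_protects_likeness: bool


def flagged_questions(check: ImageCheck) -> List[str]:
    """Return the name of every question that was answered 'yes'."""
    return [name for name, answer in vars(check).items() if answer]


def needs_review(check: ImageCheck) -> bool:
    """A single 'yes' is enough to pick another image or ask a legal expert."""
    return bool(flagged_questions(check))


# Usage: a reviewer thinks the generated face resembles a real person.
example = ImageCheck(
    looks_like_real_person=True,
    made_from_real_photos_without_permission=False,
    could_mislead_viewers=False,
    local_law_protects_likeness=False,
)
if needs_review(example):
    print("Hold this image:", ", ".join(flagged_questions(example)))
```

Treating any single “yes” as a stop mirrors the advice above to avoid an image whenever there is doubt.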

#4. What Happens if My Ad Uses a Synthetic Face That Resembles a Real Person?

You could face legal trouble if your ad uses an AI-generated face that looks like a real person. This is even more so if your business operates or targets audiences in Denmark.

So, when creating ads for a Danish audience, it’s best to avoid realistic AI images, especially if they’re meant to look like real Danish people. Otherwise, your business could face voice and image copyright issues.

#5. Should I Stop Using AI Tools Entirely?

Not at all. AI can help you work faster, create content, and be more creative. Just remember to use it carefully and make sure you’re not:

- Violating someone’s legal rights;

- Using copyrighted or misleading images; or

- Relying too much on AI without checking the output.

In other words, think of AI as a tool. As such, it’s not a replacement for human judgment, creativity, or decision-making, especially in ads or other similar uses.

Conclusion

As AI tools improve, countries are updating their laws to keep up, just like Denmark. Other places, like Australia, may soon do the same.

Australian businesses shouldn’t wait for lawsuits or penalties before creating and implementing rules against AI abuse. Instead, it’s best to stay informed, train your teams, and set internal guidelines to ensure your AI use meets both legal and ethical standards.

Whether you’re creating ads, social media content, or product designs, always ensure your AI-generated content doesn’t use people’s faces, voices, or bodies without clear consent. Otherwise, careless or illegal use could lead to public backlash, loss of trust, or even legal trouble.

After all, Denmark’s copyright law is just the beginning.

Syrine is studying law while working as a content writer. When she’s not writing or studying, she engages in tutoring, events planning, and social media browsing. In 2021, she published her book, Stellar Thoughts.